Several spatiotemporal feature point detectors have recently been used in video analysis for action recognition. Feature points are detected using a number of measures, such as saliency, cornerness, periodicity and motion activity. Each of these measures is usually intensity-based and offers a different trade-off between density and informativeness. In this paper, we use saliency for feature point detection in videos and incorporate color and motion in addition to intensity. Our method uses a multi-scale volumetric representation of the video and involves spatiotemporal operations at the voxel level. Saliency is computed by a global minimization process constrained by purely volumetric constraints, each related to an informative visual aspect, namely spatial proximity, scale and feature similarity (intensity, color, motion). Points are selected as the extrema of the saliency response and strike a good balance between density and informativeness.
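The final step, selecting points as extrema of the saliency response, can be illustrated with a minimal single-scale sketch. It assumes the saliency has already been computed as a NumPy volume indexed `(t, y, x)`, and it keeps voxels that are strict maxima over their 26-voxel spatiotemporal neighbourhood. The function name `detect_saliency_extrema` and the `threshold` parameter are hypothetical and not taken from the paper, which operates on a multi-scale representation.

```python
import numpy as np

def detect_saliency_extrema(saliency, threshold=0.0):
    """Return (t, y, x) indices of strict local maxima of a 3D saliency volume.

    Hypothetical single-scale sketch: a voxel is kept if it exceeds `threshold`
    and is strictly greater than all 26 neighbours in its 3x3x3 neighbourhood.
    Flat plateaus are therefore not reported.
    """
    vol = np.asarray(saliency, dtype=float)
    # Pad with -inf so that voxels on the volume border can still be maxima.
    padded = np.pad(vol, 1, mode="constant", constant_values=-np.inf)
    is_max = vol > threshold
    T, H, W = vol.shape
    for dt in (-1, 0, 1):
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                if dt == 0 and dy == 0 and dx == 0:
                    continue  # skip the voxel itself
                # neighbour[t, y, x] == vol[t + dt, y + dy, x + dx] (or -inf outside)
                neighbour = padded[1 + dt:1 + dt + T,
                                   1 + dy:1 + dy + H,
                                   1 + dx:1 + dx + W]
                is_max &= vol > neighbour
    return np.argwhere(is_max)


# Usage: two isolated peaks in an otherwise flat volume.
vol = np.zeros((5, 8, 8))
vol[2, 3, 4] = 1.0
vol[4, 7, 0] = 0.5
points = detect_saliency_extrema(vol, threshold=0.1)  # → rows (2, 3, 4) and (4, 7, 0)
```

A multi-scale variant would additionally compare each voxel against its neighbours at adjacent scales; that extension is left out here to keep the sketch short.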

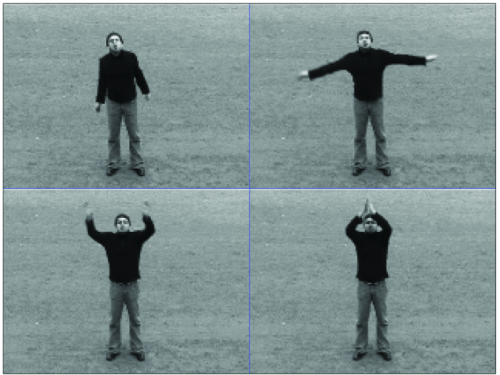

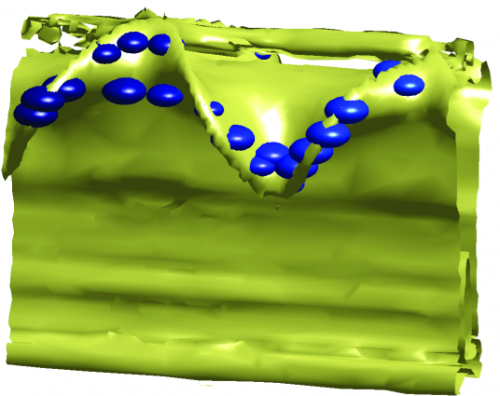

Indicative slices of a handwaving sequence and the isosurface with the detected points overlaid.

We propose a novel spatiotemporal feature point detector based on a computational model of saliency. Saliency is obtained as the solution of an energy minimization problem initialized by a set of volumetric feature conspicuities derived from intensity, color and motion. The energy is constrained by terms related to spatiotemporal proximity, scale and similarity, and feature points are detected at the extrema of the saliency response. Background noise is automatically suppressed by the global optimization framework, so the detected points are dense enough to represent the underlying actions well. We demonstrate these properties in action recognition on two diverse datasets. The results reveal behavioral details of the proposed method and provide a rigorous analysis of the advantages and disadvantages of all methods involved in the comparisons. Overall, our detector performs well in all experiments and, depending on the adopted recognition framework, either outperforms the state-of-the-art techniques it is compared against or ranks among the top performers.
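To make the idea of saliency as the solution of a constrained energy minimization more concrete, the sketch below solves a deliberately simplified, swapped-in energy rather than the authors' formulation. It assumes a single combined conspicuity volume `C` (for instance an average of intensity, color and motion conspicuities) and a single feature volume `F`, and minimizes

E(S) = Σ_i (S_i − C_i)² + λ Σ_(i,j) w_ij (S_i − S_j)²,  with w_ij = exp(−(F_i − F_j)² / σ²)

over 6-connected voxel pairs. The data term keeps `S` close to the conspicuity, the pairwise term plays the role of proximity and feature-similarity constraints, and the scale term is omitted. Setting the gradient to zero gives S_i (1 + λ Σ_j w_ij) = C_i + λ Σ_j w_ij S_j, which is solved here by Jacobi iteration. All names (`minimize_saliency_energy`, `lam`, `sigma`, `n_iter`) are hypothetical.

```python
import numpy as np

def _shift(vol, axis, step):
    """Shift `vol` by one voxel along `axis`; vacated voxels become NaN.

    step=+1 gives out[i] = vol[i - 1]; step=-1 gives out[i] = vol[i + 1].
    """
    out = np.full_like(vol, np.nan, dtype=float)
    src = [slice(None)] * vol.ndim
    dst = [slice(None)] * vol.ndim
    if step == 1:
        dst[axis], src[axis] = slice(1, None), slice(None, -1)
    else:
        dst[axis], src[axis] = slice(None, -1), slice(1, None)
    out[tuple(dst)] = vol[tuple(src)]
    return out

def minimize_saliency_energy(conspicuity, feature, lam=1.0, sigma=0.1, n_iter=200):
    """Jacobi solver for a simplified quadratic saliency energy (illustrative only)."""
    C = np.asarray(conspicuity, dtype=float)
    F = np.asarray(feature, dtype=float)
    # 6-connectivity: one forward and one backward neighbour per axis.
    neighbours = [(axis, step) for axis in range(C.ndim) for step in (1, -1)]
    # Similarity weights are fixed, so compute them once; missing neighbours get 0.
    weights = []
    for axis, step in neighbours:
        w = np.exp(-((F - _shift(F, axis, step)) ** 2) / sigma ** 2)
        weights.append(np.nan_to_num(w, nan=0.0))
    w_sum = sum(weights)
    S = C.copy()
    for _ in range(n_iter):
        acc = np.zeros_like(S)
        for (axis, step), w in zip(neighbours, weights):
            acc += w * np.nan_to_num(_shift(S, axis, step), nan=0.0)
        # Fixed-point update from dE/dS_i = 0.
        S = (C + lam * acc) / (1.0 + lam * w_sum)
    return S


# Usage: an isolated conspicuity spike in a uniform region is spread out and damped.
C = np.zeros((5, 5, 5))
C[2, 2, 2] = 1.0
S = minimize_saliency_energy(C, np.zeros_like(C))
```

Because every voxel is coupled to all others through the pairwise term, an isolated spike is attenuated, while voxels separated by a strong feature difference (small w_ij) are smoothed independently. This is one loose analogue of how a global optimization can suppress background noise. It is not a reproduction of the detector's actual energy terms.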

IVA