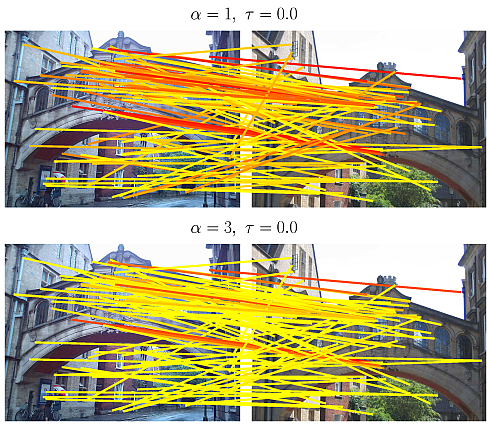

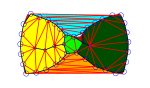

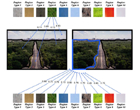

State-of-the-art data mining and image retrieval in community photo collections typically focus on popular subsets, e.g. images containing landmarks or associated with Wikipedia articles. We propose an image clustering scheme that, seen as vector quantization, compresses a large corpus of images by grouping visually consistent ones while providing a guaranteed distortion bound. This allows us, for instance, to represent the visual content of the thousands of images depicting the Parthenon in just a few dozen scene maps and still be able to retrieve any single, isolated, non-landmark image, such as a house or graffiti on a wall.
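To make the idea of clustering with a guaranteed distortion bound concrete, the following is a minimal sketch and not the scheme proposed here. It assumes images are represented by plain numeric vectors compared with Euclidean distance, and it uses a simple greedy covering rule; the function name `cluster_with_distortion_bound` and the parameter `radius` are hypothetical names introduced only for illustration.

```python
import numpy as np

def cluster_with_distortion_bound(points, radius):
    """Greedy covering (illustrative assumption, not the paper's method).

    Every point is assigned to a center at distance <= radius, so the
    quantization error of each point is bounded by `radius`.
    """
    points = np.asarray(points, dtype=float)
    centers = []                              # indices of points used as codebook entries
    assignment = np.full(len(points), -1)     # cluster id per point
    for i, p in enumerate(points):
        if centers:
            # Distance from p to every existing center.
            d = np.linalg.norm(points[centers] - p, axis=1)
            j = int(np.argmin(d))
            if d[j] <= radius:
                assignment[i] = j             # close enough: join the nearest cluster
                continue
        centers.append(i)                     # otherwise p starts its own cluster
        assignment[i] = len(centers) - 1
    return centers, assignment

# Two dense groups (stand-ins for popular scenes) and one isolated point.
pts = [[0, 0], [0.1, 0], [5, 5], [5.1, 5], [10, 0]]
centers, assignment = cluster_with_distortion_bound(pts, radius=0.5)
```

In this toy setting the dense groups collapse to one center each, while the isolated point keeps a cluster of its own and therefore stays retrievable, which mirrors the behaviour described above for non-landmark images.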

IVA
